Manually cleaning data is challenging because it is time-consuming, prone to human error, and difficult to scale as data volume increases. While it may work for small datasets, manual data cleaning becomes inefficient and unreliable for large or complex data.

Data cleaning involves fixing errors in raw data, such as removing duplicates, handling missing values, correcting incorrect entries, and standardizing formats. Clean data is essential for accurate analysis, reporting, and decision-making.

Many businesses and analysts still use manual data cleaning because it is easy to start and requires no advanced tools. Spreadsheet software like Excel or Google Sheets allows quick edits and full control, making manual methods suitable for small projects or one-time tasks.

However, as datasets grow, manual data cleaning creates serious challenges. Repetitive work slows teams down, inconsistencies appear when multiple people clean the same data, and mistakes become harder to detect. These issues lead to wasted time, inaccurate insights, and poor business decisions based on unreliable data.

What Is Manual Data Cleaning?

Manual data cleaning is the process of reviewing and fixing data by hand to make it accurate, complete, and ready for use. Instead of using automated tools or scripts, a person manually checks the data and makes corrections based on predefined rules or personal judgment. This approach is usually used when datasets are small or when quick fixes are needed.

Common tasks involved in manual data cleaning include:

Removing duplicates: Identifying and deleting repeated records that can distort analysis and reports.

Fixing missing values: Filling in empty cells, replacing them with correct values, or deciding whether to remove incomplete records.

Standardizing formats: Making data consistent, such as using the same date format, text case, or currency style across the dataset.

Correcting incorrect entries: Fixing spelling mistakes, wrong numbers, or mismatched data that occurred during data entry or collection.

Manual data cleaning is most commonly done using tools like Excel and Google Sheets, where users rely on filters, formulas, and manual checks. It is also used in small databases or during early data analysis stages, where the data volume is manageable and automation is not yet required.

Why Data Cleaning Is a Critical Step in Data Analysis

Data cleaning is a critical step in data analysis because the quality of insights depends directly on the quality of data. Even the most advanced tools or models cannot produce accurate results if the underlying data is incomplete, inconsistent, or incorrect. Clean data ensures that analysis is reliable, meaningful, and actionable.

Importance of Clean Data for Reporting

Accurate reporting relies on clean and consistent data. If reports are built on duplicate records, missing values, or incorrect entries, the results can be misleading. Poor-quality data can lead to incorrect KPIs, flawed dashboards, and a loss of trust in reports among stakeholders.

Importance of Clean Data for Machine Learning

Machine learning models are highly sensitive to data quality. Dirty data can introduce bias, reduce model accuracy, and produce unreliable predictions. Missing values, outliers, or inconsistent formats can negatively affect training and testing, making data cleaning an essential step before any model development.

Importance of Clean Data for Business Decisions

Businesses use data to make decisions related to pricing, marketing, operations, and strategy. If the data is inaccurate, decisions based on it can lead to financial losses, missed opportunities, and poor customer experiences. Clean data helps leaders make confident, data-driven decisions.

Industry research clearly highlights the cost of poor data quality. According to an IBM study, poor-quality data costs businesses trillions of dollars globally every year due to inefficiencies, rework, and incorrect decisions. This shows why data cleaning is not optional. it is a foundational requirement for any data-driven organization.

For further reading, you can refer to research and insights published by IBM and Gartner, both of which consistently emphasize the importance of data quality in analytics, AI, and enterprise decision-making.

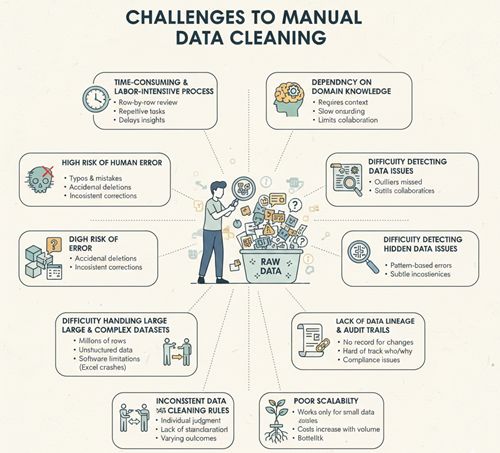

Manual data cleaning becomes challenging mainly because it depends heavily on human effort. As data grows in size and complexity, tasks that were once manageable turn slow, repetitive, and difficult to control. This creates delays, increases the chance of errors, and makes manual methods unsuitable for modern data needs.

Time-Consuming and Labor-Intensive Process

Manual data cleaning requires people to carefully review and fix data row by row. When datasets contain thousands or even millions of records, this process takes a significant amount of time and effort. Every duplicate, missing value, or incorrect entry must be identified and corrected manually, which slows down the entire workflow.

Many data cleaning tasks are also highly repetitive. Teams often perform the same actions again and again, filtering data, checking formats, and validating entries. Over time, this repetition leads to fatigue, reduced focus, and a higher risk of mistakes, further impacting productivity.

Manual data cleaning is also not practical for real-time or frequently updated data. Since humans cannot keep up with continuous data inflow, errors remain unresolved, and insights are delayed. This makes manual cleaning unsuitable for businesses that rely on timely reports, live dashboards, or fast decision-making.

High Risk of Human Error

Manual data cleaning carries a high risk of human error because every change depends on individual attention and judgment. Simple mistakes such as typos can easily occur while editing large datasets, especially when values are entered or corrected repeatedly.

Another common issue is accidental deletions. While removing duplicates or incorrect records, valid data can be mistakenly deleted, leading to incomplete or misleading datasets. These errors are often difficult to detect once the changes are saved.

Manual cleaning also results in inconsistent corrections, particularly when multiple people work on the same data. One person may apply different rules or formats than another, creating inconsistency across the dataset.

As datasets grow larger, these errors multiply quickly. A small mistake repeated across thousands of rows can significantly impact analysis, reports, and business decisions, making manual data cleaning unreliable at scale.

Difficulty Handling Large and Complex Datasets

Manually cleaning data becomes extremely difficult when datasets grow large. Millions of rows are impossible to review manually, as checking each record one by one would take an unrealistic amount of time. Even small errors can go unnoticed, simply because the data volume is too large for human review.

The challenge increases further with unstructured and semi-structured data, such as text files, logs, emails, or mixed-format records. This type of data does not follow a fixed structure, making it hard to apply consistent cleaning rules manually. Identifying patterns, inconsistencies, or hidden errors in such data requires advanced logic that manual methods cannot handle effectively.

In addition, spreadsheets struggle with performance when handling large datasets. Tools like Excel and Google Sheets may become slow, freeze, or crash when working with heavy data files. This limits productivity and increases the risk of data loss, making manual data cleaning unsuitable for large-scale or complex data environments.

Inconsistent Data Cleaning Rules

Manual data cleaning often suffers from inconsistent rules because it relies on individual judgment rather than predefined standards. Different people apply different logic when cleaning the same dataset, especially when clear guidelines are missing. One person may remove certain values, while another may keep them, leading to uneven results.

The lack of standardization makes it difficult to maintain data quality over time. Formats, naming conventions, and correction methods may change depending on who is working on the data. This inconsistency creates confusion and reduces trust in the dataset.

As a result, outcomes vary across teams and projects. The same data can produce different insights when cleaned differently, making it hard to compare reports or reuse data reliably. This variation limits collaboration and weakens the overall effectiveness of data analysis.

Poor Scalability

Manual data cleaning works reasonably well only for small datasets where the volume of data is limited and manageable. In such cases, reviewing and fixing data by hand may not take much time or effort.

However, this approach breaks down as data volume increases. As more data is collected, the time and effort required to clean it manually grow rapidly. What once took hours can turn into days or weeks, causing delays in analysis and reporting.

To keep up with growing data, organizations often end up hiring more people instead of improving systems. This increases operational costs without truly solving the problem. Since manual processes do not scale efficiently, they become a long-term bottleneck rather than a sustainable solution.

Lack of Data Lineage and Audit Trails

Manual data cleaning usually does not maintain a proper record of changes. There is often no clear history of what was modified, when it was changed, or what the original value was. Once edits are saved, earlier versions of the data may be lost.

It also becomes hard to track who changed what and why, especially when multiple people work on the same file. Without logs or version control, identifying the source of an error or reversing a mistake is difficult.

This lack of transparency creates compliance and trust issues. In regulated industries, teams must show how data was handled and modified. When manual processes cannot provide audit trails or data lineage, it raises concerns about data reliability and accountability.

Difficulty Detecting Hidden Data Issues

Manual data cleaning makes it hard to identify issues that are not immediately visible. Outliers are often missed, especially in large datasets, because unusual values can blend in and escape manual checks. These outliers can heavily influence analysis and distort results if left uncorrected.

Another challenge is that pattern-based errors go unnoticed. Issues such as repeated incorrect entries, inconsistent naming patterns, or systematic data entry mistakes are difficult to spot without automated rules or comparisons. Manual reviews typically focus on obvious errors rather than underlying patterns.

Without predefined rules or analytical models, many inaccuracies remain undetected. Human review alone cannot consistently identify complex relationships or subtle inconsistencies in data, making manual data cleaning unreliable for ensuring high data quality.

Dependency on Domain Knowledge

Manual data cleaning heavily depends on deep domain knowledge. To decide whether a value is correct or incorrect, the person cleaning the data must understand the context, meaning, and business rules behind it. Without this understanding, valid data may be changed or important errors may be overlooked.

This creates challenges when new team members are involved. They often struggle to interpret the data correctly, as undocumented rules and assumptions are not always clear. As a result, they require more guidance and supervision during the cleaning process.

This dependency also slows onboarding and collaboration. Teams spend extra time explaining data logic instead of working efficiently, and collaboration becomes harder when only a few individuals fully understand how the data should be cleaned.

Not Suitable for Real-Time or Streaming Data

Manual data cleaning cannot keep up with real-time or continuously generated data. Since the process depends on human intervention, it is impossible to clean data as it flows in from live sources such as applications, sensors, or user activity.

This limitation causes delays in reporting and insights. Data must be collected first and cleaned later, which slows down dashboards, analytics, and decision-making. By the time the data is ready, it may already be outdated.

Manual methods are also not compatible with modern data pipelines, which rely on automation, speed, and consistency. Today’s data systems are designed to process and analyze data continuously, making manual data cleaning an impractical approach for real-time environments.

Common Problems Faced During Manual Data Cleaning

Manual data cleaning often leads to recurring issues that affect data accuracy and reliability. These problems are common across industries and are difficult to fully eliminate when data is handled by hand.

Duplicate records: Repeated entries inflate data size and distort analysis, often occurring due to multiple data sources or repeated data entry.

Missing or null values: Empty fields reduce data completeness and can lead to incorrect calculations or misleading results.

Inconsistent formats: Variations in dates, currencies, text cases, or units make data hard to analyze and compare.

Incorrect or outdated data: Old or wrong information remains in datasets, leading to inaccurate insights and poor decisions.

Mismatched entries across sources: Data collected from different systems may not align properly, causing conflicts and inconsistencies.

These issues highlight why manual data cleaning becomes increasingly difficult as data volume and complexity grow.

Manual Data Cleaning vs Automated Data Cleaning

The difference between manual and automated data cleaning becomes clear when comparing efficiency, accuracy, and scalability. While manual methods may work for small tasks, automated data cleaning is better suited for modern, data-heavy environments.

| Aspect | Manual Data Cleaning | Automated Data Cleaning |

|---|---|---|

| Speed | Slow due to human effort and repetition | Fast with rule-based and automated processes |

| Accuracy | Error-prone and inconsistent | Consistent and reliable |

| Scalability | Poor, works only for small datasets | High, handles large and growing data volumes |

| Cost | High due to ongoing labor requirements | Lower over time after setup |

| Auditability | Limited or no change tracking | Strong audit trails and logs |

This comparison clearly shows why organizations increasingly prefer automated data cleaning as their data needs grow.

Best Practices to Reduce Challenges in Manual Data Cleaning

While manual data cleaning has limitations, following best practices can help reduce errors and improve efficiency. These steps make the process more structured and less risky, especially for small datasets.

Use predefined rules and checklists: Set clear guidelines for handling duplicates, missing values, and formats to ensure consistency across the dataset.

Maintain documentation: Record data definitions, cleaning rules, and assumptions so everyone understands how the data should be handled.

Validate changes: Double-check cleaned data by comparing it with the original dataset to catch mistakes early.

Limit dataset size: Apply manual cleaning only to small or sample datasets where human review is practical.

Combine with semi-automation tools: Use formulas, filters, or basic scripts to assist manual work and reduce repetitive tasks.

Following these practices helps minimize risks and makes manual data cleaning more manageable, though it remains unsuitable for large-scale data operations.

Tools That Help Reduce Manual Data Cleaning Effort

Using the right tools can significantly reduce the effort and errors involved in manual data cleaning. These tools do not fully eliminate manual work, but they help automate repetitive tasks and improve consistency.

Excel advanced functions: Features like Power Query, conditional formatting, pivot tables, and lookup formulas help clean and organize data more efficiently.

Google Sheets formulas: Functions such as

FILTER,QUERY,VLOOKUP, andREGEXhelp identify duplicates, fix formats, and validate data in real time.OpenRefine: An open-source tool designed specifically for cleaning messy data, especially useful for exploring patterns and fixing inconsistencies in large datasets.

Python (Pandas): Pandas allows rule-based data cleaning, validation, and transformation, making it ideal for handling larger and more complex datasets.

ETL tools: Extract, Transform, Load (ETL) tools automate data cleaning as part of data pipelines, reducing manual effort and improving scalability.

These tools provide an opportunity to internally link related content on data automation, data preprocessing, or analytics workflows for better navigation and SEO.

FAQs – What Makes Manually Cleaning Data Challenging?

Why is manual data cleaning inefficient?

Manual data cleaning is inefficient because it relies on human effort for repetitive tasks. As data volume grows, the process becomes slow, time-consuming, and difficult to manage, leading to delays in analysis and reporting.

What are the risks of manual data cleaning?

The main risks include human errors, inconsistent cleaning rules, accidental data loss, and missing hidden issues in large datasets. These risks can result in inaccurate insights and poor decision-making.

Can manual data cleaning ensure data accuracy?

Manual data cleaning can improve accuracy for small datasets, but it cannot ensure consistent accuracy at scale. Human mistakes and lack of standardization make it unreliable for large or complex data.

Is manual data cleaning suitable for big data?

No, manual data cleaning is not suitable for big data. Large and continuously growing datasets require automated tools and systems to handle volume, speed, and complexity efficiently.

What happens if data is not cleaned properly?

If data is not cleaned properly, it can lead to incorrect reports, flawed analysis, biased machine learning models, and poor business decisions, ultimately affecting performance and trust in data.

Final Thoughts: Why Manual Data Cleaning Becomes a Bottleneck

Manual data cleaning becomes a bottleneck because it is slow, error-prone, and difficult to scale as data grows. While it may work for small datasets or one-time tasks, the challenges of time consumption, human errors, and lack of consistency make it unsuitable for modern data needs. This clearly explains what makes manually cleaning data challenging for businesses and analysts.

As organizations handle larger and more complex datasets, relying solely on manual methods limits efficiency and delays insights. Transitioning to automated or semi-automated workflows helps improve accuracy, maintain consistency, and support real-time data processing.

To move forward, consider exploring data cleaning tools, automation platforms, or best practices that reduce manual effort. You can also check related articles on data automation, analytics workflows, or AI-driven data processing to build a more reliable and scalable data strategy.